When AI Meets Politics: Unpacking the Arrest Over Alleged AI-Generated Threats in Canada

Sarah Johnson

December 6, 2025

Brief

This analysis examines the arrest of a Canadian politician accused of leaving a threatening voicemail that she says was partly AI-generated, and explores the legal, ethical, and political implications of AI’s emerging role in undermining democratic norms.

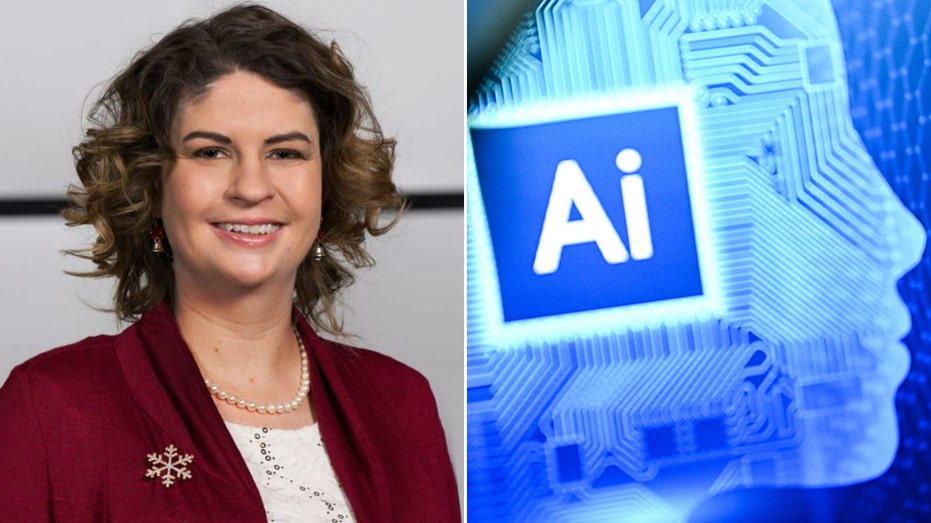

Why the Arrest of a Canadian Politician Over an Alleged AI-Generated Threat Matters

The recent arrest of Ontario Councilor Corinna Traill, over allegations that she left an intimidating voicemail that she claims was partly synthesized with artificial intelligence (AI), highlights a pivotal intersection of politics, technology, and legal frameworks. This case is not merely an isolated political scandal. It illustrates the growing challenges that rapidly evolving AI tools, capable of convincingly mimicking human voices, pose for governance and criminal justice. Beyond the sensational headlines, this story raises critical questions about accountability, disinformation, and the readiness of democratic institutions to cope with AI’s disruptive potential.

The Bigger Picture: A New Frontier in Political Malfeasance

Political intimidation and smear campaigns are well documented historically, but the tools used to carry them out have tended to be direct and tangible: phone calls, letters, even public statements. Traill’s claim that portions of a threatening voicemail were artificially generated marks a significant shift. It echoes earlier concerns that "deepfake" video and audio could be used to weaponize misinformation.

The prospect of AI-generated speech being used to threaten a political opponent reflects growing anxieties worldwide about AI’s misuse in electoral processes, from fake social media personas to synthesized videos aimed at discrediting opponents. Since the 2010s, social media interference and digital propaganda have eroded traditional electoral norms. AI now threatens to accelerate these risks by making falsified evidence easier to produce and harder to detect.

What This Really Means: Legal and Ethical Quagmires Ahead

At its core, this case forces legal systems to grapple with questions they have never fully contemplated. If portions of the threatening message are AI-generated, who bears legal responsibility: Traill herself, her team, or an unidentified malicious actor? Her denial, coupled with the claim that AI was involved, could reflect either a novel defense tactic or a genuine warning about emerging technological vulnerabilities. This ambiguity complicates prosecution and challenges investigative techniques that rely heavily on forensic linguistic and voice analysis.

Moreover, the ethical implications for democratic participation are profound. Intimidation undermines public trust in elections, and if AI can facilitate such tactics anonymously or through plausible deniability, it could severely deter civic engagement. This dynamic might embolden bad actors to weaponize AI against political challengers, eroding the principle that public offices are contested fairly and transparently.

Expert Perspectives

Dr. Emily Chen, a scholar specializing in AI ethics at the University of Toronto, emphasizes, "This case underscores the urgent need for clear legal definitions regarding AI-generated content in political contexts. Current legislation lags behind technology’s rapidly advancing capabilities to impersonate individuals with near-perfect accuracy." Similarly, Robert Mason, a former cybercrime investigator, notes, "Voice synthesis technology complicates evidence authentication in criminal investigations. We must invest heavily in technological countermeasures to detect AI-manipulated speech to ensure justice is served fairly."

Data & Evidence: The Rise of AI-Facilitated Disinformation

Recent reports point to a surge in AI-generated content used to spread misinformation: OpenAI reported that by late 2024, millions of synthetic audio clips were circulating online weekly, with a small but growing fraction weaponized for harassment and deception. A 2023 Pew Research survey also found that 65% of respondents were worried about AI deepfakes affecting trust in media and political communication. These trends signal a dangerous trajectory in which trust in political discourse may erode further.

Looking Ahead: What To Watch For

This case will likely become a bellwether for how jurisdictions address AI’s role in election-related crimes. Key developments to watch include:

- Judicial precedents: How courts interpret evidence involving AI-synthesized speech.

- Legislative reforms: Whether new laws explicitly criminalize AI-generated political threats or misinformation tactics.

- Technological defenses: Advances in forensic tools to detect AI manipulation in voice and video communications.

- Political ramifications: Impact on candidate safety, campaign tactics, and public confidence in elections.

It will also be critical to watch how politicians, political parties, and regulatory bodies respond as they try to balance the benefits of AI innovation against the risks AI poses to democratic integrity.

The Bottom Line

The arrest of Corinna Traill underscores the transformative challenges AI poses in the political and legal domains. It spotlights urgent questions about accountability when synthetic media enables threats and misinformation, and it signals a pressing need for updated legal frameworks and technical expertise. As AI tools grow more accessible and sophisticated, societies must prepare robust responses to protect democratic processes and ensure that technology empowers rather than undermines public trust.

Editor's Comments

This incident marks a dangerous new development where AI technology intersects with political conflict, challenging traditional notions of proof and accountability. It prompts us to rethink how democratic institutions respond to technologies that can distort truth and weaponize intimidation. Importantly, it raises broader questions about how societies can safeguard democratic participation in an era when AI-generated misinformation can easily undermine electoral integrity. Policymakers, technologists, and civil society must urgently collaborate to build resilient defenses against this new form of political manipulation.
